Shaders Explained: Gradients

Writing functional and performant shaders is a basic skill for any GPU developer, no matter which framework or engine they use. But there is not much information about it on the web, especially when it comes to Metal. Shading languages are pretty similar, hours spent on ShaderToy can give you some inspiration and knowledge about OpenGL shaders, and you can easily convert them to Metal, yet I personally feel a huge lack of structured information about shader writing. So in this article, and in others under the title Shaders Explained, I’ll go through different shaders in detail, teach you some shader techniques and explain my motivation behind each line of code. I hope it will make both you and me better shader programmers.

For the first article I chose a pretty basic gradient shader which I implemented for one of my personal projects. Gradients are at the very heart of GPU programming, and every shader tutorial starts with a triangle colored with a gradient of red, green and blue. But we won’t build yet another Hello Triangle tutorial. Instead I’ll show you how to write a gradient shader that fills a texture using arbitrary gradient stops with different locations and colors, for three basic gradient types. Additionally, we’ll add support for rotation. In effect we will implement most of CAGradientLayer or SwiftUI Gradient from scratch, with the main focus on the GPU side.

CPU

Drawing a gradient in iOS is pretty simple. All the available implementations have a similar API. You define an array of gradient stops, each of which contains a location in normalized 1D coordinate space (from 0 to 1) and a color. CAGradientLayer asks for separate arrays of locations and colors, while SwiftUI uses the Gradient.Stop struct with a CGFloat location and a SwiftUI Color. But we won’t use SwiftUI Color, CGColor or other UI-related colors available in iOS, since they are not available on the GPU and Metal Shading Language knows nothing about them. Instead we will use four Float values packed in a single SIMD register (SIMD4<Float> in Swift), because Metal has the matching type float4. Also, each channel of our colors needs to be defined in the 0 to 1 range and not from 0 to 255. Metal supports unsigned integers for color definition, but the common practice is to use floating point values.
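If your colors start out as 8-bit channels, the conversion to the normalized form is a single division. Here is a minimal sketch; the helper name normalizedColor is hypothetical and not part of any Apple API.

```swift
// Hypothetical helper: converts 8-bit RGBA channels (0...255) into the
// normalized 0...1 floats that a Metal `float4` color is expected to hold.
func normalizedColor(red: UInt8, green: UInt8, blue: UInt8, alpha: UInt8 = 255) -> SIMD4<Float> {
    let channels = SIMD4<Float>(Float(red), Float(green), Float(blue), Float(alpha))
    return channels / 255
}

let opaqueRed = normalizedColor(red: 255, green: 0, blue: 0)
print(opaqueRed)
```

The result has the same memory layout as four consecutive Float values, which is what the shader side reads as float4.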

    struct GradientStop {
        let location: Float
        let color: SIMD4<Float>
    }

Colors between gradient stops are interpolated linearly. So in the middle between a stop with location 0 and opaque black color (0, 0, 0, 1) and the next stop with location 1 and white color (1, 1, 1, 1) there will be a gray color with RGBA values (0.5, 0.5, 0.5, 1). Besides stops, we need support for three types of gradients and a rotation angle, so the whole gradient structure will look like this.
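To make the interpolation rule concrete, here is a small CPU-side reference in Swift. It is only a sketch for checking expected values: the function color(at:in:) is hypothetical, it assumes the stops are sorted by location, and it is not how the shader computes colors.

```swift
struct GradientStop {
    let location: Float
    let color: SIMD4<Float>
}

// CPU reference for linear interpolation between gradient stops.
// Assumes `stops` is sorted by ascending location.
func color(at location: Float, in stops: [GradientStop]) -> SIMD4<Float> {
    guard let first = stops.first, let last = stops.last else {
        return SIMD4<Float>(repeating: 0) // no stops: transparent black
    }
    if location <= first.location { return first.color }
    if location >= last.location { return last.color }
    // Find the first pair of neighbouring stops that encloses `location`.
    for (lower, upper) in zip(stops, stops.dropFirst()) where location <= upper.location {
        let span = upper.location - lower.location
        if span == 0 { return upper.color } // two stops at the same location
        let t = (location - lower.location) / span
        return lower.color + (upper.color - lower.color) * t
    }
    return last.color
}

let stops = [
    GradientStop(location: 0, color: SIMD4<Float>(0, 0, 0, 1)), // opaque black
    GradientStop(location: 1, color: SIMD4<Float>(1, 1, 1, 1))  // opaque white
]
print(color(at: 0.5, in: stops))
```

Locations below the first stop or above the last one return the edge colors, which is the same behaviour that edge clamping gives on the GPU.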

    struct Gradient {
        enum GradientType: UInt8 {
            case linear
            case radial
            case angular
        }

        struct GradientStop {
            let location: Float
            let color: SIMD4<Float>
        }

        let type: GradientType
        let stops: [GradientStop]
        let rotationAngle: Float
    }

Instead of a rotation angle we could support the full range of transform matrices with scale and translation, but this is outside of our topic for now. I won’t cover the full CPU-side implementation with render pipeline creation and encoding here because it depends heavily on how you work with Metal in your app. It would also require explaining a huge part of Metal’s Swift API. You can find that in the Metal by Example blog or in Ray Wenderlich’s Metal by Tutorials. I want to focus on the shader implementation here.

GPU

To make a gradient fill on the GPU we need to write two pairs of render shaders, each with a vertex and a fragment function. The first pair will take the stops and produce the actual gradient in a 1D texture (a texture with a height of 1 pixel), and the second will stretch it onto the final texture according to the gradient type and rotation angle. The main problem, which took me quite a while to solve, was how to map the gradient onto the final texture differently based on its type.

But first things first. Let’s write the gradient shader.

1D Gradient Texture

    struct GradientTextureVertex {
        float4 position [[ position ]];
        float4 color;
    };

    [[ vertex ]]
    GradientTextureVertex gradientTextureVertex(constant float* positions [[ buffer(0) ]],
                                                constant float4* colors [[ buffer(1) ]],
                                                const ushort vid [[ vertex_id ]]) {
        return {
            .position = float4(fma(positions[vid], 2.0f, -1.0f), 0.0f, 0.0f, 1.0f),
            .color = colors[vid]
        };
    }

    [[ fragment ]]
    float4 gradientTextureFragment(GradientTextureVertex in [[ stage_in ]]) {
        return in.color;
    }

For those of you familiar with Metal Shading Language, this shader doesn’t require further explanation. For Metal newcomers I’ll briefly explain some details. But if this is the first article about Metal you have read, I highly recommend reading the first two parts of Up and Running with Metal by Warren Moore here and here before continuing. They will give you the Metal basics required to understand what is happening here. He has also written the best book about Metal available, so check it out.

The [[ vertex ]] and [[ fragment ]] attributes tell the compiler what these functions are for. The first one will be called as many times as you request in the render encoder’s drawPrimitives function: usually once per vertex of your scene geometry, but in our case once per gradient stop. A vertex function has to return a vertex position in homogeneous coordinate space. It can return either a bare float4 value or a struct that contains the position. In the latter case you need to tell the compiler which member holds the position using the [[ position ]] attribute.

All values passed from Swift code to a GPU shader need to be packed in Metal buffers. They are just boxes for raw data without any type information. It is your responsibility to put properly aligned data in buffers and to use the corresponding types in shaders. If you put a UInt8 in a buffer but the shader expects a ushort, you will have a crash. In the reverse situation the shader will run but you won’t get the expected result, and these kinds of bugs are really hard to find and debug, so be careful. In our shader we pass all the stops’ locations in the first buffer with index 0 and all the colors in the second one. But since our locations are in the range from 0 to 1, we need to make two additional modifications to them.
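On the Swift side, splitting the stops into the two arrays the shader expects could look like the sketch below. The variable names are hypothetical, and the actual upload call is only mentioned in a comment because it depends on how you encode your render pass.

```swift
struct GradientStop {
    let location: Float
    let color: SIMD4<Float>
}

let stops = [
    GradientStop(location: 0.0, color: SIMD4<Float>(1, 0, 0, 1)),
    GradientStop(location: 0.5, color: SIMD4<Float>(0, 1, 0, 1)),
    GradientStop(location: 1.0, color: SIMD4<Float>(0, 0, 1, 1))
]

// buffer(0) in the shader is read as `float*`, buffer(1) as `float4*`,
// so the Swift element types must be Float and SIMD4<Float> respectively.
let locations: [Float] = stops.map { $0.location }
let colors: [SIMD4<Float>] = stops.map { $0.color }

// Byte lengths to pass along with the data. Use `stride`, not `size`,
// so that any padding between elements is accounted for.
let locationsLength = locations.count * MemoryLayout<Float>.stride
let colorsLength = colors.count * MemoryLayout<SIMD4<Float>>.stride

// These arrays and lengths would then go to the render encoder, for example
// through setVertexBytes(_:length:index:) with indices 0 and 1.
print(locationsLength, colorsLength)
```

With three stops this gives 12 bytes of locations and 48 bytes of colors, since a SIMD4<Float> occupies 16 bytes just like float4 in the shader.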

First, we need values for Y, Z and W in homogeneous coordinates. We use zero for Y because we render to a texture that is one pixel high, and zero for Z because there is no depth in our scene; we just render in 2D. W needs to be one because the rasterizer, which converts geometry data to pixels on screen, divides XYZ by W before passing the final values to the fragment function. You will definitely need to understand this concept when you write shaders for 3D, but in our case it is not required.

The second step is to convert the normalized 0...1 coordinate space to Metal clip space, where the X and Y axes range from -1 to 1 and the Z axis from 0 to 1. We use the fma function for this, which is short for Fused Multiply-Add. It takes three parameters (x, y, z) and returns x * y + z. Of course we could write positions[vid] * 2.0f - 1.0f instead, but fma does it in one processor instruction with a single rounding after the whole operation.
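The same mapping can be checked on the CPU. This sketch uses Swift’s addingProduct method, the standard library’s fused multiply-add; the function name clipSpaceX is hypothetical.

```swift
// Maps a normalized 0...1 location to the -1...1 clip-space X coordinate,
// mirroring fma(location, 2.0f, -1.0f) in the shader.
func clipSpaceX(for location: Float) -> Float {
    // addingProduct(a, b) returns self + a * b with a single rounding.
    return (-1 as Float).addingProduct(location, 2)
}

for location: Float in [0, 0.25, 0.5, 1] {
    print(location, clipSpaceX(for: location))
}
```

Location 0 lands on the left edge at -1, location 0.5 on the center at 0, and location 1 on the right edge at 1.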

The fragment function will be called once per pixel of the rendered texture. In our case we just return the color associated with that pixel. The value returned from the vertex function is passed to the fragment function in the [[ stage_in ]] parameter. The magic happens between these two functions: the Metal rasterizer interpolates all the colors and passes the interpolated value to each fragment function call. That means that if we have two gradient stops with locations 0 and 1 and black and white colors respectively, and render them to a texture 100 pixels wide and 1 pixel high, the fragment function will be called 100 times, and in each call the color will be interpolated between float4(0, 0, 0, 1) and float4(1, 1, 1, 1). On the 10th pixel the color passed to the fragment function will be roughly float4(0.1, 0.1, 0.1, 1), on the 50th the value will be about 0.5 for the RGB channels, and so forth. As you can see, we don’t need to do the interpolation ourselves; the GPU will do it faster and with fewer errors :)
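To get a feeling for the values the fragment function receives, here is a CPU approximation of that interpolation for two stops. It is a sketch only: the function rasterizedRow is hypothetical, and it assumes each pixel is evaluated at its center, which is my simplification of what the rasterizer does.

```swift
// CPU approximation of the interpolated colors for a two-stop gradient
// rendered into a texture `width` pixels wide and 1 pixel high.
// Assumption: each pixel is evaluated at its center, (index + 0.5) / width.
func rasterizedRow(from start: SIMD4<Float>, to end: SIMD4<Float>, width: Int) -> [SIMD4<Float>] {
    return (0..<width).map { (index: Int) -> SIMD4<Float> in
        let t = (Float(index) + 0.5) / Float(width)
        return start + (end - start) * t
    }
}

let black = SIMD4<Float>(0, 0, 0, 1)
let white = SIMD4<Float>(1, 1, 1, 1)
let row = rasterizedRow(from: black, to: white, width: 100)
print(row[9], row[49])
```

Under this assumption the 10th pixel gets about 0.095 and the 50th about 0.495 in the RGB channels, close to the round numbers above, while alpha stays at 1 everywhere.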

After this step we have a shiny new 1D texture with the gradient properly colored according to the stops. Let’s map it onto the final texture.

2D Gradient Texture

All three gradient types that our shader supports follow the same implementation pattern. We will use UV coordinates to map the gradient onto the final texture, and apply rotation to the UVs while keeping the positions in place. I’ll explain why later. In the fragment shader we will use a sampler with the clamp_to_edge address mode, so all we need to do is properly convert UV to a position on the 1D gradient texture according to the gradient type.

struct GradientVertex {

float4 position [[ position ]];

float2 uv;

};

enum GradientType: uchar {

kLinear,

kRadial,

kAngular

};

constant float2 positions[4] {

{ -1.0f, 1.0f },

{ -1.0f, -1.0f },

{ 1.0f, 1.0f },

{ 1.0f, -1.0f }

};

constant float2 uvs[4] {

{ 0.0f, 0.0f },

{ 0.0f, 1.0f },

{ 1.0f, 0.0f },

{ 1.0f, 1.0f }

};

[[ vertex ]]

GradientVertex gradientVertex(constant float2x2& rotationTransform [[ buffer(0) ]],

const ushort vid [[ vertex_id ]]) {

return {

.position = float4(positions[vid], 0.0f, 1.0f),

.uv = (uvs[vid] - float2(0.5f)) * rotationTransform + float2(0.5f)

};

}

[[ fragment ]]

float4 gradientFragment(GradientVertex in [[ stage_in ]],

texture2d<float, access::sample> gradientTexture [[ texture(0) ]],

constant GradientType& gradientType [[ buffer(0) ]]) {

constexpr sampler s(filter::linear, coord::normalized, address::clamp_to_edge);

float2 texCoords;

switch (gradientType) {

case kLinear:

// TODO: Assign `texCoords` for Linear

break;

case kRadial:

// TODO: Assign `texCoords` for Radial

break;

case kAngular:

// TODO: Assign `texCoords` for Angular

break;

}

return gradientTexture.sample(s, texCoords);

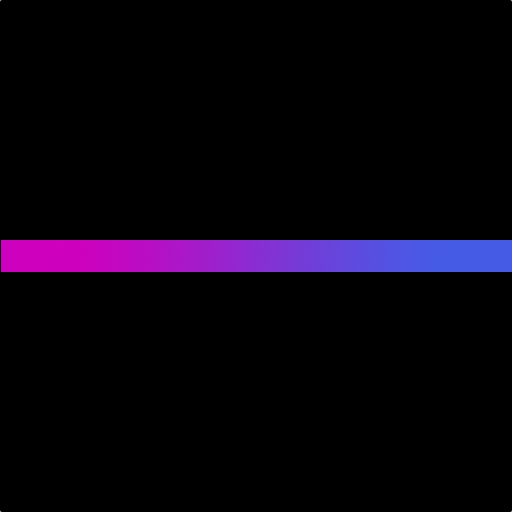

}Why we can’t use just position and need separate UV coordinates here? The reason is not only because positions are in -1...1 range and UVs are in 0...1 range. If it was the only issue we could just use the same fma function to convert values. Actually positions in fragment shader will be with absolute values. Since it runs once per pixel you’ll have the position of that pixel during execution. The second reason is that pixels outside of our rotated geometry won’t be rendered. Here on the first image we rotate vertex positions and on the second UVs. Also since rotation is performed from center of coordinates we need to subtract 0.5f from UVs first, perform rotation and add it back again. Without this shift UVs will be rotated around top left corner.
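The shift, rotate and shift back sequence is easy to verify on the CPU. The sketch below is an illustration under assumptions: the function rotatedUV is hypothetical, and its rotation direction may be the opposite of what your rotationTransform matrix produces, depending on how you build that matrix.

```swift
import Foundation

// Rotates a UV coordinate around the texture center (0.5, 0.5).
// Assumption: a standard 2D rotation; the matrix passed to the shader
// may use the opposite direction or a different layout.
func rotatedUV(_ uv: SIMD2<Float>, by angle: Float) -> SIMD2<Float> {
    let center = SIMD2<Float>(repeating: 0.5)
    let p = uv - center                  // move the origin to the center
    let c = cos(angle)
    let s = sin(angle)
    let rotated = SIMD2<Float>(p.x * c - p.y * s,
                               p.x * s + p.y * c)
    return rotated + center              // move the origin back
}

// The bottom right corner rotated by 45 degrees leaves the 0...1 range.
let corner = rotatedUV(SIMD2<Float>(1, 1), by: Float.pi / 4)
print(corner)
```

The center stays where it is, while the rotated corner ends up with a V value of about 1.2, outside the 0...1 range. Such values are the reason the sampler uses edge clamping.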

So we have created the proper geometry in the vertex shader; let’s move on to the fragment shader. So far we have only a 1D texture with the gradient, and we need to stretch it over the final texture no matter what size it has. Here is how we will stretch it based on gradient type, for linear, radial and angular gradients respectively. The colored line is our 1-pixel-high gradient texture; I made it larger for illustration purposes.

For a linear gradient it’s pretty straightforward: we just assign UV to texCoords. Because of the clamp_to_edge address mode, the sampler returns the closest edge value for all UVs outside the 0...1 range. This doesn’t matter without rotation, since all pixels of the final texture have UVs in the proper range, but if we apply rotation, UVs at the corners will have values below zero or above one. With edge clamping every pixel will still be filled with a proper color.
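As a CPU-side illustration of what edge clamping means for the linear case, consider the sketch below. The function linearPosition is hypothetical; on the GPU the clamping is done by the sampler, not by code like this.

```swift
// Position on the 1D gradient texture for a linear gradient.
// Only the U coordinate matters because the gradient texture is 1 pixel high.
// min/max emulate the sampler's clamp_to_edge address mode.
func linearPosition(for uv: SIMD2<Float>) -> Float {
    return min(max(uv.x, 0), 1)
}

print(linearPosition(for: SIMD2<Float>(-0.2, 0.3))) // left of the texture
print(linearPosition(for: SIMD2<Float>(0.25, 0.3))) // inside the texture
print(linearPosition(for: SIMD2<Float>(1.2, 0.3)))  // right of the texture
```

Coordinates inside the range pass through unchanged, and everything outside snaps to the first or last gradient color.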

    texCoords = in.uv;

A radial gradient runs uniformly from the center, so we need to calculate how far the current pixel is from it. Since UVs are normalized, the center is always at float2(0.5f, 0.5f). To find the distance between two points we move the origin to the second point by subtracting it from the first, and calculate the length of the resulting vector. Inside the gradient circle this length ranges from 0 to 0.5, so we multiply it by 2 to get the full 0...1 range for sampling. And as you already understand, pixels outside of the circle will be filled with the color of the last pixel of the gradient texture because of clamping.
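The radial mapping can be mirrored on the CPU to check a few values by hand. This is a sketch with a hypothetical function name, radialPosition, and the final min stands in for the sampler’s edge clamping.

```swift
// Position on the 1D gradient texture for a radial gradient.
func radialPosition(for uv: SIMD2<Float>) -> Float {
    let offset = uv - SIMD2<Float>(repeating: 0.5)   // vector from the center
    let distance = (offset.x * offset.x + offset.y * offset.y).squareRoot()
    // Distance is 0...0.5 inside the circle, so scale it to 0...1.
    // min emulates clamp_to_edge for pixels outside the circle.
    return min(distance * 2, 1)
}

print(radialPosition(for: SIMD2<Float>(0.5, 0.5)))  // center
print(radialPosition(for: SIMD2<Float>(0.75, 0.5))) // halfway to the edge
print(radialPosition(for: SIMD2<Float>(1, 1)))      // corner, outside the circle
```

The center samples the first gradient color, a point halfway to the edge samples the middle of the gradient, and the corners fall outside the circle and get the last color.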

    texCoords = length(in.uv - float2(0.5f)) * 2.0f;

With an angular gradient things are a bit more complicated. We need to calculate the angle between two vectors. The first one is the same vector from the center of the texture to the current pixel, and the second one is the horizontal vector pointing in the positive X direction. Once we have found the angle, we normalize it and sample the gradient texture at this normalized position. To find the angle there is the popular atan2 function. You may have used it in CoreGraphics while manipulating CGPoints, or in other graphics frameworks. atan2 returns a value in the -PI...PI range, and normalization is done by dividing by PI and applying our old friend fma.
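Here is the same angle normalization as a CPU-side Swift sketch, handy for checking which angle maps to which part of the gradient. The function name angularPosition is hypothetical.

```swift
import Foundation

// Position on the 1D gradient texture for an angular gradient.
func angularPosition(for uv: SIMD2<Float>) -> Float {
    let offset = uv - SIMD2<Float>(repeating: 0.5)  // vector from the center
    let angle = atan2(offset.y, offset.x)           // -pi...pi
    // Divide by pi to get -1...1, then scale and shift into 0...1.
    return (angle / Float.pi) * 0.5 + 0.5
}

print(angularPosition(for: SIMD2<Float>(1, 0.5)))   // positive X direction
print(angularPosition(for: SIMD2<Float>(0.5, 1)))   // positive Y direction
print(angularPosition(for: SIMD2<Float>(0.5, 0)))   // negative Y direction
```

A pixel straight along the positive X axis maps to the middle of the gradient (0.5), and the gradient wraps around at the negative X axis, where the angle jumps from PI to -PI.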

    // PI is assumed to be a constant defined elsewhere, for example from Metal's M_PI_F.
    const float2 offsetUV = in.uv - float2(0.5f);
    const float angle = atan2(offsetUV.y, offsetUV.x);
    texCoords = float2(fma(angle / PI, 0.5f, 0.5f), 0.0f);

And that’s it! You’re now fully prepared to implement CAGradientLayer or SwiftUI Gradient, from the CPU API up to GPU rendering. Here is the final Gist with the full shader implementation. If you have any questions, feel free to shoot me an email at contact@mtldoc.com or connect via Twitter @petertretyakov.

And one more thing :) Besides the gradient shader I’ve also made this nice Gradient builder purely in SwiftUI. I covered it in a separate post here. You can also find a link to a demo app there with the full implementation of this shader.